Analyzing video and images with deep learning using AWS Rekognition

In this post, we look at how to analyze video and images with deep learning using AWS Rekognition.

Introduction

According to a survey, the number of CCTV cameras is increasing exponentially every year. Last year, the Delhi government alone installed more than 100,000 cameras in the city. These devices generate a massive amount of data, and searching CCTV footage (video files) for people or incidents is a tiresome and error-prone task for humans, especially when several faces have to be found in footage from multiple cameras spanning days or months.

Amazon Rekognition offers a solution: a highly scalable deep learning technology that requires no machine learning expertise to use.

AWS Rekognition is a deep learning service with relatively low cost and easy implementation, so its video face search feature suits this use case. In response to a search, the service reports where a person’s face appears in a particular video and at what time. This data can be used to search for suspected or missing persons in the video.

AWS Rekognition Features

AWS Rekognition provides the following capabilities:

- Object and Scene Detection (car, plane, flower, etc.)

- Face analysis (gender, eyeglasses, smile, beard)

- Face comparison (comparing face from one picture to another)

- Image Moderation (detect explicit content)

- Celebrity Recognition

To integrate these features into existing applications, Rekognition exposes various APIs (e.g. the DetectFaces API). The image APIs accept input in JPEG and PNG format, with a maximum size of 15 MB for an image stored in an S3 bucket and 5 MB for an image passed as a byte array. AWS Rekognition can be used from the Java, .NET, and JavaScript SDKs.
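The input limits above can be checked before an image is sent to the service. The following is a minimal sketch and not part of the Rekognition SDK: the class `ImageInputCheck` and its methods are hypothetical names, the 5 MB limit is taken from the text (interpreted here as 5 × 1024 × 1024 bytes, which is an assumption), and the format is detected from the standard JPEG and PNG file signatures.

```java
import java.util.Optional;

/** Hypothetical pre-flight check for an image passed to Rekognition as a byte array. */
final class ImageInputCheck {
    /** 5 MB limit for byte-array input, as stated in the text (assumed to mean MiB). */
    static final int MAX_INLINE_BYTES = 5 * 1024 * 1024;

    enum Format { JPEG, PNG }

    // Standard PNG file signature (the first eight bytes of every PNG file).
    private static final byte[] PNG_SIGNATURE =
            {(byte) 0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A};
    // JPEG files start with the bytes FF D8 FF.
    private static final byte[] JPEG_SIGNATURE = {(byte) 0xFF, (byte) 0xD8, (byte) 0xFF};

    private static boolean startsWith(byte[] data, byte[] prefix) {
        if (data.length < prefix.length) {
            return false;
        }
        for (int i = 0; i < prefix.length; i++) {
            if (data[i] != prefix[i]) {
                return false;
            }
        }
        return true;
    }

    /** Detects the format from the leading bytes; empty if neither JPEG nor PNG. */
    static Optional<Format> detectFormat(byte[] data) {
        if (startsWith(data, JPEG_SIGNATURE)) {
            return Optional.of(Format.JPEG);
        }
        if (startsWith(data, PNG_SIGNATURE)) {
            return Optional.of(Format.PNG);
        }
        return Optional.empty();
    }

    /** True if the bytes look like a JPEG or PNG and fit within the byte-array limit. */
    static boolean isAcceptable(byte[] data) {
        return data.length <= MAX_INLINE_BYTES && detectFormat(data).isPresent();
    }
}
```

Checking the signature rather than the file extension catches renamed files early. It does not guarantee that the service will accept the image.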

AWS Rekognition provides various APIs to support this functionality. For example, the DetectFaces API takes an image as input and returns the faces found in it, along with attributes such as facial landmarks, age range, gender, emotions, eyeglasses, beard, mouth open, and eyes open.

Use Case: Search for suspected thieves or burglars in CCTV footage

The faces of suspected thieves or burglars can be searched for in CCTV footage (a video file) with AWS Rekognition. To do this, one or more images of the suspect are needed.

AWS Rekognition saves much of the human effort, and avoids many of the errors, involved in searching for faces in a video file. As a result, it returns each detected face with the time at which it appears in the video and a confidence score. Consider a building with a number of CCTV cameras, where a suspect has to be found in many videos (say 50) covering a given period (say 24 hours). Searching for several faces in that much footage is a tiresome and error-prone task for a human being.

AWS Rekognition can be used to find several suspects in the video. To do so, an image collection is created and the suspects’ images are added to it.

To analyze a video, it must be stored in an Amazon S3 bucket. As all video operations are asynchronous, the analysis is started by passing the bucket name, video name, and collection name as parameters. When the asynchronous job finishes, it sends a notification message to an Amazon SNS topic. This status can be retrieved by polling an Amazon Simple Queue Service (SQS) queue that is subscribed to the topic. The result of the analysis can then be logged by the application.

Instead of using Amazon SQS, one might also implement an AWS Lambda function that subscribes to the Amazon SNS topic. The function is called for each message on the topic and can then process the analysis results on the server side.

Video Format: The videos must be encoded using the H.264 codec. Supported file formats are MPEG-4 and MOV. A video file can contain one or more codecs. If you encounter any difficulties, please verify that the specific file contains H.264 encoded content.

Video File Size: The maximum file size for videos is 8 GB. If you have larger files, you must first split them into smaller chunks.

Steps to configure AWS Rekognition using Java-SDK

Follow the steps below to configure AWS Rekognition:

1. Create an AWS Free Tier account using the following link: https://aws.amazon.com/free/?all-free-tier.sort-by=item.additionalFields.SortRank&all-free-tier.sort-order=asc

2. Download and install the AWS CLI for your operating system using the following link: https://docs.aws.amazon.com/cli/latest/userguide/cli-chap-install.html

3. After installing the AWS CLI, verify the installation by running the following command in a terminal:

aws --version

4. Create an S3 bucket with a unique name such as “reko-bucket”.

5. Go to your AWS profile -> My Security Credentials -> Identity and Access Management (IAM) -> Access management -> Users -> Add User

Please add a user “reko_usr” and click on next

6. Please click on “Create Group” with a group name like “reko_usr_group”

Now attach AmazonRekognitionFullAccess and AmazonS3ReadOnlyAccess policies and click on the “Create Group” button.

7. Now download the access key ID and secret access key from Identity and Access Management (IAM).

8. Now open a terminal and configure the AWS CLI by executing the command below and entering your information:

$ aws configure

AWS Access Key ID [None]: AKIAIOSFODNN7EXAMPLQ

AWS Secret Access Key [None]: wJalrXUtnFEMI/K7MDENG/bPxRALEKEQ

Default region name [None]: us-west-2

Default output format [None]: json

For details, please refer to the AWS CLI documentation.

Note: Please use the access key ID and secret access key of your own AWS account.

Steps to configure AWS Rekognition for video search

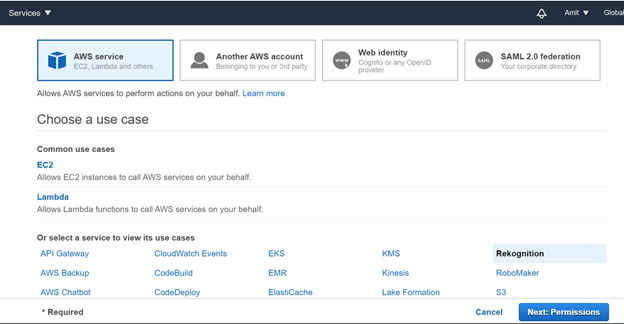

For video analysis, we need to set up an IAM service role that allows Amazon Rekognition to access Amazon SNS topics. To do so, go to the IAM service page inside the AWS console and create a new role. Choose “AWS service” as the type and “Rekognition” as the service:

In the next step check that the new role has the policy “AmazonRekognitionServiceRole” attached:

This role allows the Amazon Rekognition service to access SNS topics that are prefixed with “AmazonRekognition”.

The last step requires you to specify a name for the new role.

Please write down the ARN of this role, as we need it later for the IAM user policy and when calling the Rekognition video API from the Java SDK.

You need to ensure that the user you are using has at least the following permissions:

- AmazonSQSFullAccess

- AmazonRekognitionFullAccess

- AmazonS3ReadOnlyAccess

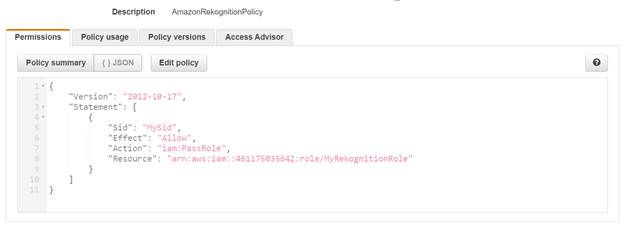

Additionally, we add an “inline policy” to this IAM user, as shown in the screenshot. Please replace the ARN in it with the role ARN you noted above.

Now create an SNS topic: navigate to the SNS service inside the AWS console and create a new topic. Please note that the topic name must start with AmazonRekognition. Then click on the “Create Topic” button:

Please write down the ARN of the topic. Next, create a standard queue for this topic using the SQS console inside AWS:

The new queue is supposed to store messages for the previously created topic. Hence, we subscribe this queue to the topic:

Verify that the SNS topic can send messages to the queue by reviewing the permissions of the SQS queue:

Now upload a video to an S3 bucket and create an AWS Rekognition image collection containing images of the suspected persons.

Make sure that the bucket resides in the same region as the SNS topic, the SQS queue, and the region configured for the application.

Please replace the values for the constants SQS_QUEUE_URL, ROLE_ARN, and SNS_TOPIC_ARN with your values.

The code first creates a NotificationChannel using the ARNs of the role and the SNS topic. This channel is used when submitting the StartFaceSearchRequest. The request also specifies the video location in Amazon S3 using the bucket and video name, the collection to search, the minimum confidence for matches, and a tag for the job. The response contains the ID of the job, which is processed asynchronously in the background.

AmazonSQS provides the method receiveMessage() to collect new messages from the SQS queue. The URL of the queue is provided as the first parameter to this method. The following code iterates over all messages and extracts the job ID. If it matches the one we have obtained before, the status of the job is evaluated. In case it is SUCCEEDED, we can query the Amazon Rekognition service for the results.
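The matching step described above can be sketched without the SDK. This is a minimal illustration and not the original code of this post: `JobNotification` and its methods are hypothetical names. It assumes the message body is a JSON document with `JobId` and `Status` string fields, possibly embedded with escaped quotes in the `Message` field of an SNS envelope. A regular expression keeps the sketch free of dependencies; a JSON library would be the more robust choice in a real application.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper that inspects the body of a message received from the SQS queue. */
final class JobNotification {
    // Each pattern matches "Name":"value" as well as the escaped form
    // \"Name\":\"value\" that appears inside an SNS envelope.
    private static final Pattern JOB_ID = fieldPattern("JobId");
    private static final Pattern STATUS = fieldPattern("Status");

    private static Pattern fieldPattern(String name) {
        return Pattern.compile("\\\\?\"" + name + "\\\\?\"\\s*:\\s*\\\\?\"([^\"\\\\]*)");
    }

    private static Optional<String> extract(Pattern pattern, String body) {
        Matcher matcher = pattern.matcher(body);
        return matcher.find() ? Optional.of(matcher.group(1)) : Optional.empty();
    }

    /** Job ID found in the message body, if any. */
    static Optional<String> jobId(String body) {
        return extract(JOB_ID, body);
    }

    /** Job status found in the message body, if any. */
    static Optional<String> status(String body) {
        return extract(STATUS, body);
    }

    /** True if the message reports that the given job finished successfully. */
    static boolean isSucceeded(String body, String expectedJobId) {
        return jobId(body).filter(expectedJobId::equals).isPresent()
                && status(body).filter("SUCCEEDED"::equals).isPresent();
    }
}
```

In the polling loop, each received message body would be passed to `isSucceeded` together with the job ID returned when the search was started. Messages for other jobs are skipped.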

This is done by submitting a GetFaceSearchRequest with the job ID, the maximum number of results, and a sort order to the Rekognition service. As the list may be very long, the results are paged using a token. While the result contains a “next token”, we have to submit another request to retrieve the remaining results. For each match we output the matched face, its confidence, and the time relative to the beginning of the video.
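The token-based paging described above follows a generic pattern, sketched here without the SDK. The names `Paging`, `Page`, and `fetchAll` are hypothetical and only illustrate the loop; in an actual application the page-fetching function would wrap the GetFaceSearch call and hand back the matches together with the next token from the response.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

/** Hypothetical helper that collects all pages of a token-paged result. */
final class Paging {
    /** One page of results plus the token for the next page (null on the last page). */
    static final class Page<T> {
        final List<T> items;
        final String nextToken;

        Page(List<T> items, String nextToken) {
            this.items = items;
            this.nextToken = nextToken;
        }
    }

    /**
     * Calls fetchPage with a null token first, then with each returned token,
     * until a page comes back without a next token.
     */
    static <T> List<T> fetchAll(Function<String, Page<T>> fetchPage) {
        List<T> all = new ArrayList<>();
        String token = null;
        do {
            Page<T> page = fetchPage.apply(token);
            all.addAll(page.items);
            token = page.nextToken;
        } while (token != null);
        return all;
    }
}
```

Collecting everything into one list is convenient for short videos. For long footage it may be preferable to process each page as it arrives instead of holding all matches in memory.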

Conclusion: The AWS Rekognition service returns the face ID of each person found in a particular video, together with the timestamp at which the face appears. This data can be used to search for suspected or missing persons in the video.